Hello all,

I’ve just uploaded binaries for a new version of Mitsuba. This release and the next few will focus on catching up on some more production-centric features. This time it’s motion blur; the main additions are:

Moving light sources

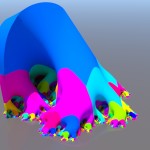

The first image demonstrates a point light source moving along a rose curve inside a partially metallic Cornell box. Because the image is rendered with a bidirectional path tracer, the light source itself is visible. Due to the camera’s defocus blur, the point light shows up as a ribbon rather than a thin curve.

This can be fairly useful even when rendering static scenes: a point light swept along a line segment during the exposure acts as a linear light source, which Mitsuba doesn’t natively implement.

Animating a light source is as simple as replacing its toWorld transformation

```xml
<transform name="toWorld"> ... emitter-to-world transformation ... </transform>
```

with the following new syntax:

```xml
<animation name="toWorld">
    <transform time="0">
        ... transformation at time 0 ...
    </transform>
    <transform time="1">
        ... transformation at time 1 ...
    </transform>
</animation>
```

Mitsuba uses linear interpolation for scaling and translation and spherical linear interpolation for rotation. Higher-order interpolation or more detailed animations can be approximated by simply providing multiple linear segments per frame.
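To make the interpolation rule concrete, here is a minimal Python sketch, assuming each keyframe has already been decomposed into a translation, a scale, and a unit rotation quaternion. The function names (`lerp`, `slerp`, `interpolate_keyframes`, `sample_animation`) and the dictionary layout are hypothetical; this is not Mitsuba’s implementation, only an illustration of linear interpolation for translation and scale, spherical linear interpolation for rotation, and a list of linear segments standing in for a more detailed path.

```python
import bisect
import math


def lerp(a, b, t):
    """Component-wise linear interpolation between two tuples."""
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))


def axis_angle_quat(axis, degrees):
    """Unit quaternion (w, x, y, z) for a rotation about `axis`."""
    half = math.radians(degrees) / 2.0
    norm = math.sqrt(sum(c * c for c in axis))
    s = math.sin(half) / norm
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip one to take the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: a normalized lerp avoids dividing by ~0.
        q = lerp(q0, q1, t)
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))


def interpolate_keyframes(k0, k1, t):
    """Lerp translation and scale, slerp rotation (t in [0, 1])."""
    return {
        "translate": lerp(k0["translate"], k1["translate"], t),
        "scale": lerp(k0["scale"], k1["scale"], t),
        "rotate": slerp(k0["rotate"], k1["rotate"], t),
    }


def sample_animation(times, frames, t):
    """Evaluate a piecewise animation given sorted keyframe `times`."""
    if t <= times[0]:
        return frames[0]   # assumption: hold the first keyframe
    if t >= times[-1]:
        return frames[-1]  # assumption: hold the last keyframe
    i = bisect.bisect_right(times, t) - 1  # segment [times[i], times[i + 1])
    local = (t - times[i]) / (times[i + 1] - times[i])
    return interpolate_keyframes(frames[i], frames[i + 1], local)


identity = axis_angle_quat((0, 0, 1), 0.0)
quarter_turn = axis_angle_quat((0, 0, 1), 90.0)
k0 = {"translate": (0.0, 0.0, 0.0), "scale": (1.0, 1.0, 1.0), "rotate": identity}
k1 = {"translate": (2.0, 4.0, 6.0), "scale": (3.0, 1.0, 1.0), "rotate": quarter_turn}
mid = sample_animation([0.0, 1.0], [k0, k1], 0.5)  # halfway: a 45-degree turn about z
```

Holding the first or last keyframe outside the animated time range is a choice made for this sketch; the release notes don’t say how Mitsuba treats such times.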

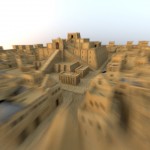

Moving sensors

Moving sensors work exactly the same way; an example is shown below. All new animation features also provide interactive visualizations in the graphical user interface (medieval scene courtesy of Johnathan Good).

- Rendering

- Interactive preview

Objects undergoing linear motion

Objects can be animated with the same syntax, but this is currently restricted to linear motion. I wanted to include support for nonlinear deformations in this release, but since it took longer than expected, it will have to wait until the next version.

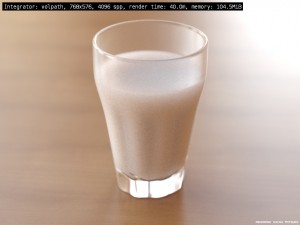
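The blur itself comes from averaging over the shutter interval. The following minimal sketch, assuming a one-dimensional film and a point moving linearly between two keyframes, shows the idea: each sample picks a time, interpolates the position, and deposits its share of energy into a pixel. Renderers typically draw a random time per ray; stratified times are used here only to keep the result deterministic, and `render_moving_point` is a hypothetical name.

```python
import math


def render_moving_point(x0, x1, width, num_samples):
    """Accumulate a unit-energy point moving from x0 to x1 over the shutter [0, 1]."""
    film = [0.0] * width
    for i in range(num_samples):
        t = (i + 0.5) / num_samples  # stratified shutter time
        x = x0 + (x1 - x0) * t       # linear motion between two keyframes
        pixel = math.floor(x)
        if 0 <= pixel < width:
            film[pixel] += 1.0 / num_samples  # each sample carries an equal share
    return film


film = render_moving_point(2.0, 6.0, width=10, num_samples=4000)
```

A point moving from x = 2 to x = 6 during the shutter spreads its energy evenly over pixels 2 through 5, each receiving about a quarter, which is the streak visible in a motion-blurred rendering.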

Render-time annotations

The ldrfilm and hdrfilm plugins now support render-time annotations to facilitate record keeping. Annotations embed useful information inside a rendered image so that it is later available to anyone viewing the image. They can either be placed into the image metadata (i.e., without disturbing the rendered image) or “baked” into the image as a visible label. Various keywords can be used to collect all relevant information, e.g.:

```xml
<string name="label[10, 10]" value="Integrator: $integrator['type'], $film['width']x$film['height'], $sampler['sampleCount'] spp, render time: $scene['renderTime'], memory: $scene['memUsage']"/>
```

Providing the above parameter to hdrfilm has the following result:

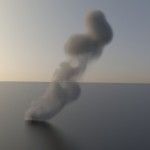

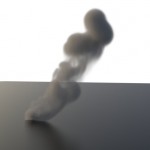
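The keywords in the annotation label follow a `$object['property']` pattern. Below is a minimal sketch of how such a template could be expanded, assuming the scene information is available as nested dictionaries; `expand_annotation` and the sample values are hypothetical and not Mitsuba’s code. Leaving unknown keywords untouched is a design choice of the sketch.

```python
import re

# Matches keywords of the form $object['property'], e.g. $film['width'].
KEYWORD = re.compile(r"\$(\w+)\['(\w+)'\]")


def expand_annotation(template, properties):
    """Replace each $object['property'] with its value from nested dicts."""
    def lookup(match):
        obj, key = match.group(1), match.group(2)
        value = properties.get(obj, {}).get(key)
        # Leave unknown keywords untouched rather than failing.
        return match.group(0) if value is None else str(value)
    return KEYWORD.sub(lookup, template)


# Hypothetical values, for illustration only.
scene_info = {
    "integrator": {"type": "bdpt"},
    "film": {"width": 512, "height": 512},
    "sampler": {"sampleCount": 256},
}
label = expand_annotation(
    "Integrator: $integrator['type'], $film['width']x$film['height'], "
    "$sampler['sampleCount'] spp",
    scene_info,
)
# label == "Integrator: bdpt, 512x512, 256 spp"
```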

Hiding directly visible emitters

Several rendering algorithms in Mitsuba can now hide directly visible light sources (e.g., environment maps or area lights). While not particularly realistic, this is convenient for removing the background from a rendering so that it can be pasted into a document with a different background color. Together with an improved alpha channel computation for participating media, this makes results like the following possible:

- Original image

- Composited onto a white background
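Pasting a rendering with hidden emitters onto a colored page is ordinary alpha compositing. The sketch below assumes premultiplied (associated) alpha, so a pixel composited over an opaque background becomes `color + (1 - alpha) * background`; if an output file stores straight alpha instead, multiply the color by alpha first. The function names are hypothetical.

```python
def over_background(rgba, background=(1.0, 1.0, 1.0)):
    """Composite one premultiplied RGBA pixel over an opaque RGB background."""
    r, g, b, alpha = rgba
    return tuple(c + (1.0 - alpha) * bg for c, bg in zip((r, g, b), background))


def composite(pixels, background=(1.0, 1.0, 1.0)):
    """Composite a list of premultiplied RGBA pixels over the same background."""
    return [over_background(p, background) for p in pixels]


image = [(0.0, 0.0, 0.0, 0.0),   # hidden emitter: fully transparent
         (0.2, 0.1, 0.05, 0.5),  # half-transparent medium
         (0.3, 0.3, 0.3, 1.0)]   # opaque surface
on_white = composite(image)
```

Fully transparent pixels, where the hidden environment map used to be, take on the background color, while fully opaque pixels are unchanged.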

Improved instancing

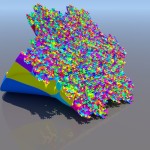

While Mitsuba has supported instancing for a while, there were still a few lurking corner cases that could cause problems. In a recent paper on developing fractal surfaces in three dimensions, Geoffrey Irving and Henry Segerman used Mitsuba to render heavy scenes (hundreds of millions of triangles) by instancing repeated substructures, which was a good incentive to fix the problems once and for all. The revamped instancing plugin now also supports non-rigid transformations. The two renderings from the paper shown below illustrate a 2D Gosper curve developed up to level 5 along the third dimension:

- Top view

- Bottom view
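One consequence of allowing non-rigid instance transformations (non-uniform scale or shear) is that surface normals can no longer be transformed like points: they need the inverse transpose of the linear part. The minimal sketch below illustrates that, together with the basic idea of instancing (one shared mesh, many transforms). The `Instance` class and its layout are hypothetical and do not describe Mitsuba’s instancing plugin.

```python
import math
from dataclasses import dataclass


def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def transpose(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate; raises if it is singular."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("singular instance transform")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


@dataclass
class Instance:
    """One placement of a shared mesh via the affine map p -> M p + offset."""
    mesh: list     # shared vertex positions, stored once for all instances
    matrix: list   # 3x3 linear part; may include non-uniform scale or shear
    offset: tuple  # translation

    def vertices(self):
        return [tuple(x + o for x, o in zip(mat_vec(self.matrix, p), self.offset))
                for p in self.mesh]

    def normal(self, n):
        # Transforming a normal like a point would tilt it off the surface
        # under non-rigid maps; the inverse transpose keeps it perpendicular.
        m = mat_vec(transpose(inverse3(self.matrix)), n)
        length = math.sqrt(sum(x * x for x in m))
        return tuple(x / length for x in m)


shared = [(0.0, 0.0, 0.0), (1.0, -1.0, 0.0)]
a = Instance(shared, [[2, 0, 0], [0, 1, 0], [0, 0, 1]], (0.0, 0.0, 0.0))
b = Instance(shared, [[1, 0, 0], [0, 1, 0], [0, 0, 1]], (0.0, 0.0, 5.0))
```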

Miscellaneous

- Threading on Windows: Edgar Velázquez-Armendáriz fixed the thread-local storage (TLS) implementation and a related race condition that previously caused occasional deadlocks and crashes on Windows.

- Caching: Edgar added a caching mechanism to the serialized plugin, which significantly accelerates the very common case where many shapes are loaded from the same file in sequence.

- File dialogs: Edgar upgraded most of the “File/Open”-style dialogs in Mitsuba so that they use the native file browser on Windows (the generic dialog provided by Qt is rather ugly).

- Python: The Python bindings are now much easier to load on OS X, and the new release exposes further Mitsuba core functions.

- Blender interaction: Fixed an issue where GUI tabs containing scenes created in Blender could not be cloned.

- Non-uniform scales: All triangle mesh-based shapes now permit non-uniform scales.

- NaNs and friends: Increased resilience against various numerical corner cases.

- Index-matched participating media: Fixed an unfortunate regression in volpath regarding index-matched media that was accidentally introduced in 0.4.2.

- roughdiffuse: Fixed texturing support in the roughdiffuse plugin.

- Photon mapping: Fixed some inaccuracies involving participating media when rendered by the photon mapper and the Beam Radiance Estimate.

- Conductors: Switched Fresnel reflectance computations for conductors to the exact expressions predicted by geometric optics (an approximation was previously used).

- New cube shape: Added a cube shape plugin for convenience. It does exactly what one would expect.

- The rest: As usual, a large number of smaller bug fixes and improvements fell below the threshold and are not listed individually; the repository log has more details.
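For reference, the exact geometric-optics reflectance of a conductor follows from the Fresnel equations evaluated with a complex index of refraction η + ik. The Python sketch below assumes unpolarized light arriving from a medium of index 1 (vacuum or air); it is a textbook formulation, not Mitsuba’s source, and it does not reproduce the approximation that was used previously.

```python
import cmath


def fresnel_conductor(cos_theta_i, eta, k):
    """Unpolarized reflectance at an air/conductor interface, complex IOR eta + i*k.

    cos_theta_i is the cosine of the incident angle, in [0, 1].
    """
    n = complex(eta, k)
    sin2_theta_i = 1.0 - cos_theta_i * cos_theta_i
    # n * cos(theta_t) from Snell's law; for eta, k > 0 the principal root
    # has a positive imaginary part, i.e. a wave attenuated inside the metal.
    root = cmath.sqrt(n * n - sin2_theta_i)
    r_s = (cos_theta_i - root) / (cos_theta_i + root)                  # perpendicular
    r_p = (n * n * cos_theta_i - root) / (n * n * cos_theta_i + root)  # parallel
    return 0.5 * (abs(r_s) ** 2 + abs(r_p) ** 2)
```

At normal incidence this reduces to ((η - 1)² + k²) / ((η + 1)² + k²), and at grazing incidence the reflectance approaches 1; with k = 0 it becomes the familiar dielectric Fresnel term.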

Where to get it

The documentation was updated as well and has now grown to over 230 pages. Get it here.

The new release is available on the download page.

Great!! Works fine on Windows XP SP3 32-bit.

Wenzel, you’re a genius! Thanks so much for the Cube primitive.

No time to play with the other additions – I must finish GridVolume! [super excited]

I’ll upload a video as soon as it’s integrated.

Oops… mistyped my name there.

How long did that bi-directional image take with the moving point light?

half an hour or so? I wanted it to be noise-free.

Great!

Hi Wenzel, great work!

I bet you have seen this recent paper http://cg.ibds.kit.edu/PSR.php

What do you think about it? Are you going to implement it, or something similar?

Keep rocking

Hi,

It looks interesting, but I currently don’t plan to implement it. This being an open-source project, though, I’m always open to outside contributions.

Wenzel

I just remembered, there is still the Hair update coming too. Exciting times for sure.

Great work! Thanks :p

I just upgraded to 0.4.2, and now this awesome update is here.

It’s amazing that you call a release with this many features just a minor release.

It’s a good thing the Blender exporter is slowly catching up (dipole works). I hope these new features make it into the exporter.

BTW, will you be posting this news on BlenderNation and BlenderArtists?

OK, and I love your renders.

Oh, looks like you have already posted on the BlenderArtists plugin page.

I’m going to try nudging Bart to post this on BlenderNation.

I already posted announcements on si-community and CGTalk, so those are covered.

excellent news

Thanks for the good work …

Regards

Hi Wenzel, what do you mean by motion blur “is currently restricted to linear motion. I wanted to include support for nonlinear deformations in this release, but…”? Are you referring to deformation motion blur for the next release? I don’t understand what “nonlinear” motion blur is, since you already implemented motion blur along curved paths.

Thanks

That’s right — it’s deformation motion such as an exploding object or a squishy ball bouncing around.

Currently, you can approximate nonlinear motion at a macro scale (via multiple linear segments), but this works at the object level and is thus too “coarse” for something like fine-scale deformations.
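Approximating a curved path with several linear segments is a sampling trade-off: more keyframes mean a closer match. The minimal sketch below reuses the rose curve from the moving-light example (the petal parameter k = 3, the unit radius, and the mapping of time to angle are assumptions) to generate keyframe positions and measure the largest gap between the true path and its piecewise-linear approximation at equal times. The function names are hypothetical.

```python
import math


def rose_point(theta, k=3, radius=1.0):
    """Point on the rose curve r = radius * cos(k * theta)."""
    r = radius * math.cos(k * theta)
    return (r * math.cos(theta), r * math.sin(theta))


def rose_keyframes(num_segments, k=3, radius=1.0):
    """(time, position) pairs; time in [0, 1] maps to theta in [0, pi].

    For odd k, one pass over theta in [0, pi] traces the whole curve.
    """
    return [(i / num_segments, rose_point(math.pi * i / num_segments, k, radius))
            for i in range(num_segments + 1)]


def max_error(num_segments, k=3, radius=1.0, probes=32):
    """Largest distance, at equal times, between the curve and its linear segments."""
    frames = rose_keyframes(num_segments, k, radius)
    worst = 0.0
    for (t0, p0), (t1, p1) in zip(frames, frames[1:]):
        for j in range(1, probes):
            s = j / probes
            exact = rose_point(math.pi * (t0 + s * (t1 - t0)), k, radius)
            approx = (p0[0] + s * (p1[0] - p0[0]), p0[1] + s * (p1[1] - p0[1]))
            worst = max(worst, math.dist(exact, approx))
    return worst


for n in (8, 32, 128):
    print(n, "segments: max error", round(max_error(n), 4))
```

Each (time, position) pair could serve as one `<transform time=...>` entry in the animation syntax from the post; the error shrinks roughly quadratically as segments are added, which is why this works for object-level paths but not for fine-scale deformation.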

@Wenzel – Wow, knowing that you are on your way to implementing deformation MB is kinda “scary”; I knew it was such a hard topic. Congrats, can’t wait for it then! Do you have a public roadmap for Mitsuba?


Not currently, no. I fear I’m way too disorganized to have one without it being a joke.

Where did the “hair” repo go?

It’s still there, just hidden (since it is not yet open sourced). But it hopefully will be soon.

Another thing: compared to other render engines, our info stamp is HUGE. Not only is it huge, it’s also not alpha-blended, so it gets more obstructive the smaller the rendered image is.

Any thoughts?

Actually, the lack of alpha blending was intentional. It can be very hard to read the text on an arbitrary background.

Yes, you’re correct about readability.

Work has been kicking my butt lately, so I haven’t had much time to code on Mits. I’m still working on getting that GridVolume code out the door.

Point and spot lights don’t work in the cbox scene.

The scene is totally black if I set up a light of this kind at the eye position.

In Mitsuba, point and spot lights have an inverse-square falloff, and the Cornell box scene is “big” (~400 units). So you probably just need to set the intensity to something very high, on the order of 400^2 = 160000, to cancel out the falloff.
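The arithmetic behind this advice, as a minimal sketch: an isotropic point light of intensity I produces irradiance I / d² on a surface facing it at distance d, so matching the brightness a unit-intensity light gives at unit distance requires scaling I by d². The helper names are hypothetical, and the sketch ignores the cosine term and a spot light’s angular falloff.

```python
def irradiance(intensity, distance):
    """Irradiance from an isotropic point light on a surface facing it: E = I / d^2."""
    return intensity / (distance * distance)


def intensity_for(target_irradiance, distance):
    """Intensity needed to reach target_irradiance at the given distance."""
    return target_irradiance * distance * distance


# A unit-intensity light at distance 1 gives E = 1; at ~400 units it needs I = 400^2.
needed = intensity_for(irradiance(1.0, 1.0), 400.0)
```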