Mitsuba was used to render the results and illustrations in several technical papers that have just received their final acceptance to SIGGRAPH 2012. The ones I know of are listed below (if I missed yours, please let me know!).

- Manifold Exploration: A Markov Chain Monte Carlo technique for rendering scenes with difficult specular transport (by Wenzel Jakob and Steve Marschner)

Abstract: It is a long-standing problem in unbiased Monte Carlo methods for rendering that certain difficult types of light transport paths, particularly those involving viewing and illumination along paths containing specular or glossy surfaces, cause unusably slow convergence. In this paper we introduce Manifold Exploration, a new way of handling specular paths in rendering. It is based on the idea that sets of paths contributing to the image naturally form manifolds in path space, which can be explored locally by a simple equation-solving iteration. This paper shows how to formulate and solve the required equations using only geometric information that is already generally available in ray tracing systems, and how to use this method in two different Markov Chain Monte Carlo frameworks to accurately compute illumination from general families of paths. The resulting rendering algorithms handle specular, near-specular, glossy, and diffuse surface interactions as well as isotropic or highly anisotropic volume scattering interactions, all using the same fundamental algorithm. An implementation is demonstrated on a range of challenging scenes and evaluated against previous methods.

Gallery: the following images are courtesy of Wenzel Jakob and Steve Marschner. The interior scene was designed by Olesya Isaenko.

- Exterior-lit room; the glass egg contains an anisotropic medium

- Tableware with complex specular transport, lit by the chandelier

- A brass chandelier with 24 glass-enclosed bulbs

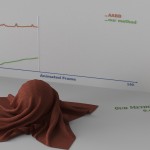

- Structure-aware Synthesis for Predictive Woven Fabric Appearance (by Shuang Zhao, Wenzel Jakob, Steve Marschner, and Kavita Bala)

Abstract: Woven fabrics have a wide range of appearance determined by their small-scale 3D structure. Accurately modeling this structural detail can produce highly realistic renderings of fabrics and is critical for predictive rendering of fabric appearance. But building these yarn-level volumetric models is challenging. Procedural techniques are manually intensive, and fail to capture the naturally arising irregularities which contribute significantly to the overall appearance of cloth. Techniques that acquire the detailed 3D structure of real fabric samples are constrained only to model the scanned samples and cannot represent different fabric designs.

This paper presents a new approach to creating volumetric models of woven cloth, which starts with user-specified fabric designs and produces models that correctly capture the yarn-level structural details of cloth. We create a small database of volumetric exemplars by scanning fabric samples with simple weave structures. To build an output model, our method synthesizes a new volume by copying data from the exemplars at each yarn crossing to match a weave pattern that specifies the desired output structure. Our results demonstrate that our approach generalizes well to complex designs and can produce highly realistic results at both large and small scales.

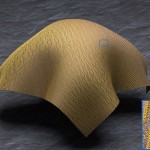

Gallery: the following images are courtesy of Shuang Zhao, Wenzel Jakob, Steve Marschner, and Kavita Bala.

- A range of fabrics with complex weave patterns, synthesized from a library of Micro-CT scans

- Fabric made using a large (96×96) wavy twill weave pattern

- A Jacquard brocade pillow made using satin and twill weaves

- Stitch Meshes for Modeling Knitted Clothing with Yarn-level Detail (by Cem Yuksel, Jonathan Kaldor, Doug L. James, and Steve Marschner)

Abstract: Recent yarn-based simulation techniques permit realistic and efficient dynamic simulation of knitted clothing, but producing the required yarn-level models remains a challenge. The lack of practical modeling techniques significantly limits the diversity and complexity of knitted garments that can be simulated. We propose a new modeling technique that builds yarn-level models of complex knitted garments for virtual characters. We start with a polygonal model that represents the large-scale surface of the knitted cloth. Using this mesh as an input, our interactive modeling tool produces a finer mesh representing the layout of stitches in the garment, which we call the stitch mesh. By manipulating this mesh and assigning stitch types to its faces, the user can replicate a variety of complicated knitting patterns. The curve model representing the yarn is generated from the stitch mesh, then the final shape is computed by a yarn-level physical simulation that locally relaxes the yarn into realistic shape while preserving global shape of the garment and avoiding “yarn pull-through,” thereby producing valid yarn geometry suitable for dynamic simulation. Using our system, we can efficiently create yarn-level models of knitted clothing with a rich variety of patterns that would be completely impractical to model using traditional techniques. We show a variety of example knitting patterns and full-scale garments produced using our system.

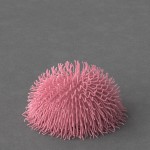

Gallery: the following images are courtesy of Cem Yuksel. Some of the models are by Christer Sveen, Rune Spaans, and Alexander Tomchuk.

- Alien wearing a sweater with the Braid Cables pattern

- Knitted dress on a mannequin

- Knitted glove with a Ribbing pattern formed by alternating knit and purl stitches

- Knitted poncho with a Ribbing pattern, simulated on a mannequin

- A knitted sweater dress for a sheep with a Ridged Feather pattern

- Knitted tea cozy with a Stockinette pattern formed by repeated knit stitches

- Energy-based Self-Collision Culling for Arbitrary Mesh Deformations (by Changxi Zheng and Doug L. James)

Abstract:

In this paper, we accelerate self-collision detection (SCD) for a deforming triangle mesh by exploiting the idea that a mesh cannot self collide unless it deforms enough. Unlike prior work on subspace self-collision culling which is restricted to low-rank deformation subspaces, our energy-based approach supports arbitrary mesh deformations while still being fast. Given a bounding volume hierarchy (BVH) for a triangle mesh, we precompute Energy-based Self-Collision Culling (ESCC) certificates on bounding-volume-related sub-meshes which indicate the amount of deformation energy required for it to self collide. After updating energy values at runtime, many bounding-volume self-collision queries can be culled using the ESCC certificates. We propose an affine-frame Laplacian-based energy definition which sports a highly optimized certificate preprocess, and fast runtime energy evaluation. The latter is performed hierarchically to amortize Laplacian energy and affine-frame estimation computations. ESCC supports both discrete and continuous SCD, detailed and nonsmooth geometry. We demonstrate significant culling on various examples, with SCD speed-ups up to 26x.

Gallery: the following images are courtesy of Changxi Zheng and Doug L. James.

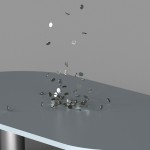
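The culling idea behind ESCC (a sub-mesh cannot self-collide until its deformation energy exceeds a precomputed certificate) can be illustrated with a toy BVH traversal. This is a minimal sketch under stated assumptions, not the authors' implementation: the `BVHNode` class, its fields, and `uncullable_queries` are hypothetical names, and the per-node energies are assumed to be already updated for the current frame (the paper evaluates an affine-frame Laplacian energy hierarchically, which is not modeled here).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BVHNode:
    # Hypothetical BVH node standing in for a bounding-volume-related sub-mesh.
    name: str
    certificate: float   # assumed: deformation energy needed before this sub-mesh can self-collide
    energy: float = 0.0  # assumed: current deformation energy, already updated this frame
    children: List["BVHNode"] = field(default_factory=list)


def uncullable_queries(node: BVHNode) -> List[str]:
    """Return the names of nodes whose self-collision query must still be run.

    If a node's energy is below its certificate, the whole subtree cannot
    self-collide, so neither the node nor any of its descendants is tested.
    """
    if node.energy < node.certificate:
        return []  # culled: not enough deformation to self-collide
    queries = [node.name]  # this node's own test (e.g. child-vs-child pairs) is still needed
    for child in node.children:
        queries.extend(uncullable_queries(child))
    return queries
```

For example, a root with energy 2.5 and certificate 2.0 whose children are `a` (energy 0.2, certificate 1.0) and `b` (energy 3.0, certificate 1.0) yields `["root", "b"]`: only the low-energy child's subtree is skipped. Culling at the highest possible node is what makes the test cheap, since one comparison can discard an entire subtree of bounding-volume queries.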

- Precomputed Acceleration Noise for Improved Rigid-body Sound (by Jeff Chadwick, Changxi Zheng and Doug L. James)

Abstract:

We introduce an efficient method for synthesizing acceleration noise (sound produced when an object experiences abrupt rigid body acceleration due to collisions or other contact events). We approach this in two main steps. First, we estimate continuous contact force profiles from rigid-body impulses using a simple model based on Hertz contact theory. Next, we compute solutions to the acoustic wave equation due to short acceleration pulses in each rigid-body degree of freedom. We introduce an efficient representation for these solutions, Precomputed Acceleration Noise, which allows us to accurately estimate sound due to arbitrary rigid-body accelerations. We find that the addition of acceleration noise significantly complements the standard modal sound algorithm, especially for small objects.

Gallery: the following images are courtesy of Jeff Chadwick, Changxi Zheng and Doug L. James.