Hello all,

much has happened since the last version of Mitsuba, so I figured it’s time for a new official release! I’m happy that there were quite a few external contributions this time.

The new features of version 0.5.0 are:

-

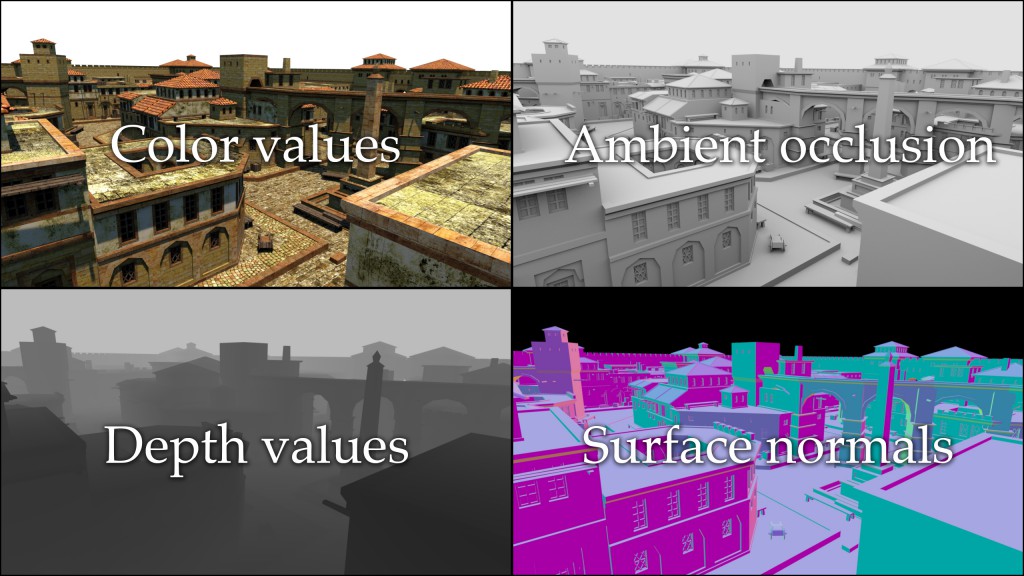

Multichannel renderings:

Mitsuba can now render images with multiple channels. These can contain the result of traditional rendering algorithms or information extracted from the visible surfaces (e.g. surface normals or depth). All computation happens in one pass, and the output is written to a dense or tiled multi-channel EXR file. This feature should be quite useful to computer vision researchers who often need synthetic ground truth data to test their algorithms. Refer to the multichannel plugin in the documentation for an example.

-

Python integration: Following in the footsteps of previous versions, this release contains many improvements to the Python language bindings. They are now suitable for building quite complex Python-based applications on top of Mitsuba, ranging from advanced scripted rendering workflows to full-blown visual material editors. The Python chapter of the documentation has been updated with many new recipes that show how to harness this functionality. The new features include:

-

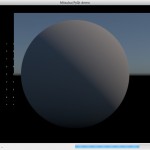

PyQt/PySide integration: It is now possible to fully control a rendering process and display partial results using a user interface written in Python. I’m really excited about this feature myself because it will free me from having to write project-specific user interfaces using C++ in the future. With the help of Python, it’s simple and fast to whip up custom GUIs to control certain aspects of a rendering (e.g. material parameters).

The documentation includes a short example that instantiates and renders a scene while visually showing the progress and partial blocks being rendered. Due to a very helpful feature of Python called buffer objects, it was possible to implement communication between Mitsuba and Qt in such a way that the user interface directly accesses the image data in Mitsuba’s internal memory without the overhead of costly copy operations.
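To make the zero-copy idea concrete, here is a minimal sketch that uses only the Python standard library. It does not call the Mitsuba or Qt APIs: the `framebuffer` bytearray is a hypothetical stand-in for renderer-owned image memory, and `memoryview` plays the role of the buffer object that a GUI toolkit would read from.

```python
# Hypothetical stand-in for renderer-owned image memory:
# 4x2 pixels with 3 bytes (RGB) per pixel. In a real application this
# memory would belong to the renderer; a bytearray is used for illustration.
width, height, channels = 4, 2, 3
framebuffer = bytearray(width * height * channels)

# A memoryview exposes the same memory through the buffer protocol.
# No pixel data is copied when the view is created.
view = memoryview(framebuffer)

# A copy taken now will NOT see any later writes.
snapshot = bytes(framebuffer)

# The "renderer" writes a red pixel at (x=1, y=0) ...
offset = (0 * width + 1) * channels
for i, value in enumerate((255, 0, 0)):
    framebuffer[offset + i] = value

# ... and the "GUI" side sees it immediately through its view.
pixel = bytes(view[offset:offset + channels])
stale_pixel = snapshot[offset:offset + channels]
```

Here `pixel` reflects the freshly written data while `stale_pixel` still holds the old contents, which is the difference between sharing a buffer and copying it.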

-

Scripted triangle mesh construction: The internal representation of triangle shapes can now be accessed and modified via the Python API—the documentation contains a recipe that shows how to instantiate a simple mesh. Note that this is potentially quite slow when creating big meshes due to the interpreted nature of Python.

-

Blender Python integration: An unfortunate aspect of Python-based rendering plugins for Blender is the poor performance when exporting geometry (this is related to the last point). It’s simply not a good idea to run interpreted code that might have to iterate over millions of vertices and triangles. Mitsuba 0.5.0 lays the groundwork for future rendering plugin improvements with two new features. First, after loading the Mitsuba Python bindings into the Blender process, Mitsuba can directly access Blender’s internal geometry data structures without having to go through the interpreter. Second, a rendered image can be passed straight to Blender without having to write an intermediate image file to disk. I look forward to seeing these features integrated into the MtsBlend plugin, where I expect that they will improve the performance noticeably.

-

NumPy integration: Nowadays, many people use NumPy and SciPy to process their scientific data. I’ve added initial support to facilitate NumPy computations involving image data. In particular, Mitsuba bitmaps can now be accessed as if they were NumPy arrays when the Mitsuba Python bindings are loaded into the interpreter.

For those who prefer to run Mitsuba as an external process but still want to use NumPy for data processing, Joe Kider contributed a new feature for the mfilm plugin to write out binary NumPy files. These are much more compact and faster to load than the standard ASCII output of mfilm.

-

Python 3.3 is now consistently supported on all platforms.

-

On OS X, the Mitsuba Python bindings can now also be used with non-Apple Python binaries (previously, doing so would result in segmentation faults).

- GUI Improvements:

-

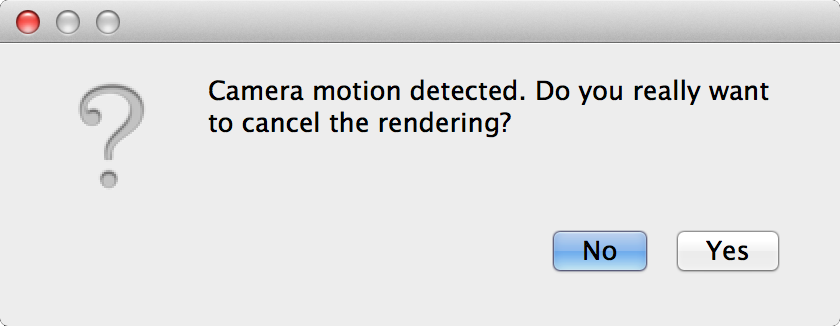

Termination of rendering jobs: This has probably happened to every seasoned user of Mitsuba at some point: an accidental click/drag into the window stops a long-running rendering job, destroying all progress made so far. The renderer now asks for confirmation if the job has been running for more than a few seconds.

-

Switching between tabs: The Alt-Left and Alt-Right keyboard shortcuts have been set up to cycle through the open tabs for convenient visual comparisons between images.

-

Fewer clicks in the render settings: Anton Kaplanyan contributed a patch that makes all render settings fields directly editable without having to double-click on them, saving a lot of unnecessary clicks.

-

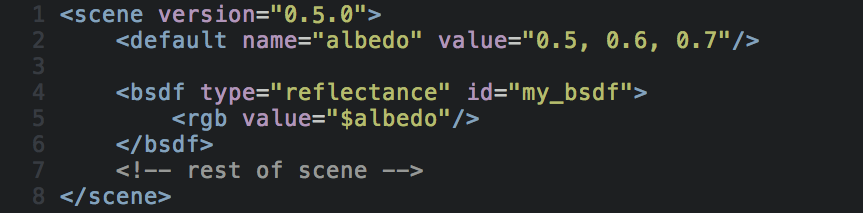

New default tag: One feature that has been available in Mitsuba since the early days was the ability to leave some scene parameters unspecified in the XML description and supply them via the command line (e.g. the albedo parameter in the following snippet). This is convenient but has always had the critical drawback that loading fails with an error message when the parameter is not explicitly specified. Mitsuba 0.5.0 adds a new XML tag named default that denotes a fallback value when the parameter is not given on the command line. The following example illustrates how to use it:
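As a rough sketch of the idea, the Python snippet below embeds a small scene fragment and emulates the substitution: `$albedo` is replaced by a value supplied by the caller, or by the fallback from the `default` tag when none is given. The scene fragment follows the conventions of the Mitsuba documentation as far as I recall them, and `resolve_parameters` is a hypothetical helper written for illustration; it is not Mitsuba's actual scene loader.

```python
import re
import xml.etree.ElementTree as ET

# Illustrative scene fragment (assumed syntax): the diffuse reflectance
# refers to a parameter named "albedo", and the <default> tag supplies
# a fallback value for it.
SCENE_XML = """
<scene version="0.5.0">
    <default name="albedo" value="0.5"/>
    <bsdf type="diffuse">
        <spectrum name="reflectance" value="$albedo"/>
    </bsdf>
</scene>
"""

def resolve_parameters(xml_text, overrides=None):
    """Hypothetical helper: substitute $name references in attributes.

    Values in `overrides` (standing in for command-line parameters)
    take precedence over <default> tags found in the scene.
    """
    root = ET.fromstring(xml_text)
    params = {d.get("name"): d.get("value") for d in root.iter("default")}
    params.update(overrides or {})

    def substitute(match):
        name = match.group(1)
        if name not in params:
            # Mirrors the failure mode without a fallback value.
            raise KeyError("parameter '%s' is undefined" % name)
        return params[name]

    for element in root.iter():
        if element.tag == "default":
            continue
        for key, value in list(element.attrib.items()):
            element.set(key, re.sub(r"\$(\w+)", substitute, value))
    return root

# No override given: the fallback from <default> is used.
fallback_value = resolve_parameters(SCENE_XML).find("bsdf/spectrum").get("value")

# Override given: it wins over the fallback.
overridden_value = resolve_parameters(
    SCENE_XML, {"albedo": "0.8"}
).find("bsdf/spectrum").get("value")
```

With the real renderer, the override would be supplied on the command line rather than through a dictionary (to my knowledge via an option of the form `-Dalbedo=0.8`).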

-

Windows 8.x compatibility: In a prior blog post, I complained about running into serious OpenGL problems on Windows 8. I have to apologize since it looks like I was the one to blame: Microsoft’s implementation is fine, and it was in fact a bug in my own code that was causing the issues. With that addressed in version 0.5.0, Mitsuba now also works on Windows 8.

-

CMake build system: The last release shipped with an all-new set of dependency libraries, and since then the CMake build system had been broken to the point of being completely unusable. Edgar Velázquez-Armendáriz tracked down all issues and submitted a big set of patches that make CMake builds functional again.

-

Other bugfixes and improvements: Edgar Velázquez-Armendáriz fixed an issue in the initialization code that could lead to crashes when starting Mitsuba on Windows.

The command line server executable mtssrv was inconvenient to use on Mac OS X because it terminated after any kind of error instead of handling it gracefully. The behavior was changed to match the other platforms.

A previous release contained a fix for an issue in the thin dielectric material model. Unfortunately, I did not apply the correction to all affected parts of the plugin back then. I’ve since fixed this and also compared the model against explicitly path traced layers to ensure a correct implementation.

Anton Kaplanyan contributed several MLT-related robustness improvements.

Anton also contributed a patch that resets all statistics counters before starting a new rendering, which is useful when batch processing several scenes or when using the user interface.

Jens Olsson reported some pitfalls in the XML scene description language that could lead to inconsistencies between renderings done in RGB and spectral mode. To address this, the behavior of the intent attribute and spectrum tag (for constant spectra) was slightly adapted. This only affects users doing spectral renderings, in which case, you may want to take a look at Section 6.1.3 of the new documentation and the associated entry on the bug tracker.

I’d also like to announce two new efforts to develop plugins that integrate Mitsuba into modeling applications:

-

Rhino plugin: TDM Solutions SL published the first version of an open source Mitsuba plugin for Rhino 3D and Rhino Gold. The repository of this new plugin can be found on GitHub, and there is also a Rhino 3D group page with binaries and documentation. It is based on an exporter I wrote a long time ago but adds a complete user interface with preliminary material support. I’m excited to see where this will go!

-

Maya plugin: Jens Olsson from the Volvo Car Corporation contributed the beginnings of a new Mitsuba integration plugin for Maya. Currently, the plugin exports geometry to Mitsuba’s .serialized format but still requires manual XML input to specify materials. Nonetheless, this should be quite helpful for Mitsuba users who model using Maya. The source code is located in the mitsuba-maya repository and prebuilt binaries are here.

Getting the new version

Precompiled binaries for many different operating systems are available on the download page. The updated documentation file (249 pages) with coverage of all new features is here (~36 MB), and a lower resolution version is also available here (~6 MB).

By the way: a little birdie told me that Mitsuba has been used in a bunch of SIGGRAPH submissions this year. If all goes well, you can look forward to some truly exciting new features!