I am excited to announce version 0.4.0 of Mitsuba! This release represents about two years of development that have been going on in various side-branches of the codebase and have finally been merged.

The change list is extensive: almost all parts of the renderer were modified in some way; the source code diff alone totals over 5MB of text (even after excluding tabular data etc). This will likely cause some porting headaches for those who have a codebase that builds on Mitsuba, and I apologize for that.

Now for the good part: it’s a major step in the development of this project. Most parts of the renderer were redesigned and feature cleaner interfaces, improved robustness and usability (and in many cases better performance). Feature-wise, the most significant change is that the renderer now ships with several state-of-the-art bidirectional rendering techniques. The documentation has achieved coverage of many previously undocumented parts of the renderer and can be considered close to complete. In particular, the plugins now have 100% coverage. Hooray!

Please read on for a detailed list of changes:

User interface

Here is a short video summary of the GUI-specific changes:

Please excuse the jarring transitions :). In practice, the preview is quite a bit snappier; in the video, it runs at about half speed because my recording application was fighting against Mitsuba over who gets to have the GPU. To recap, the main user interface changes were:

-

Realtime preview: the VPL-based realtime preview was given a thorough overhaul. As a result, the new preview is faster and produces more accurate output. Together with the redesigned sensors, it is also able to simulate out-of-focus blur directly in the preview.

-

Improved compatibility: The preview now even works on graphics cards with genuinely terrible OpenGL support.

-

Crop/Zoom support: The graphical user interface contains an interactive crop tool that can be used to render only a part of an image and optionally magnify it.

-

Multiple sensors: A scene may now also contain multiple sensors, and it is possible to dynamically switch between them from within the user interface.

Bidirectional rendering algorithms

Mitsuba 0.4.0 ships with a whole batch of bidirectional rendering methods:

-

Bidirectional Path Tracing (BDPT) by Veach and Guibas is an algorithm that works particularly well on interior scenes and often produces noticeable improvements over plain (i.e. unidirectional) path tracing. BDPT renders images by simultaneously tracing partial light paths from the sensor and the emitter and attempting to establish connections between the two.

The new Mitsuba implementation is a complete reproduction of the original method, which handles all sampling strategies described by Veach. The individual strategies are combined using Multiple Importance Sampling (MIS). A demonstration on a classic scene by Veach is shown below; in the images, s and t denote the number of sampling events from the light and eye direction, respectively. The number of pixel samples is set to 32 so that the difference in convergence is clearly visible.

- A classic test scene by Veach, rendered using plain path tracing.

- The final image produced using BDPT

- Listing of all individual sampling strategies used by BDPT. Many are too noisy to be used in this form…

- … but after re-weighting them using Multiple Importance Sampling, poor samples are discarded.

-

Path Space Metropolis Light Transport (MLT) is a seminal rendering technique proposed by Veach and Guibas, which applies the Metropolis-Hastings algorithm to the path-space formulation of light transport.

In contrast to simple methods like path tracing that render images by performing a naïve and memoryless random search for light paths, MLT actively searches for relevant light paths. Once such a path is found, the algorithm tries to explore neighboring paths to amortize the cost of the search. This is done with a clever set of path perturbations which can efficiently explore certain classes of paths. This method can often significantly improve the convergence rate of renderings that involve what one might call “difficult” input.

To my knowledge, this is the first publicly available implementation of this algorithm that works correctly.

-

Primary Sample Space Metropolis Light Transport (PSSMLT) by Kelemen et al. is a simplified version of the above algorithm.

Like MLT, this method relies on Markov Chain Monte Carlo integration, and it systematically explores the space of light paths, searching with preference for those that carry a significant amount of energy from an emitter to the sensor. The main difference is that PSSMLT does this exploration by piggybacking on another rendering technique and “manipulating” the random number stream that drives it. The Mitsuba version can operate either on top of a unidirectional path tracer or a fully-fledged bidirectional path tracer with multiple importance sampling. This is a nice method to use when a scene is a little bit too difficult for a bidirectional path tracer to handle, in which case the extra adaptiveness due to PSSMLT can bring it back into the realm of things that can be rendered within a reasonable amount of time. This is the algorithm that’s widely implemented in commercial rendering packages that mention “Metropolis Light Transport” somewhere in their product description.

-

Energy redistribution path tracing by Cline et al. combines aspects of Path Tracing with the exploration strategies of Veach and Guibas. This method generates a large number of paths using a standard path tracing method, which are then used to seed an MLT-style renderer. It works hand in hand with the next method:

- Manifold Exploration by Jakob and Marschner is based on the idea that sets of paths contributing to the image naturally form manifolds in path space, which can be explored locally by a simple equation-solving iteration. This leads to a method that can render scenes involving complex specular and near-specular paths, which have traditionally been a source of difficulty in unbiased methods. The following renderings (scene courtesy of Olesya Isaenko) were created with this method:

- Exterior-lit room; the glass egg contains an anisotropic medium

- Tableware with complex specular transport, lit by the chandelier

- A brass chandelier with 24 glass-enclosed bulbs
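As a small illustration of the Multiple Importance Sampling step mentioned for BDPT above, here is a minimal sketch of the balance heuristic, one common MIS weighting. It is not taken from the Mitsuba source, and the function names are made up for this example. The idea: if several strategies could have produced the same path, each with its own density, the strategy that actually produced it gets a weight proportional to its density, so strategies that sample a path poorly contribute little.

```python
def balance_heuristic(pdfs):
    """Return MIS weights w_i = p_i / sum_j p_j for one path.

    `pdfs` holds the density with which each sampling strategy would
    have generated the same path (hypothetical input for this sketch).
    """
    total = sum(pdfs)
    if total == 0.0:
        return [0.0 for _ in pdfs]
    return [p / total for p in pdfs]


def mis_weighted_contribution(f, pdfs, used):
    """Weighted estimate w_used * f / p_used for the strategy that was used."""
    weight = balance_heuristic(pdfs)[used]
    return weight * f / pdfs[used]


if __name__ == "__main__":
    # Two strategies; the second is three times as likely to find this path.
    pdfs = [0.5, 1.5]
    print(balance_heuristic(pdfs))
    print(mis_weighted_contribution(2.0, pdfs, used=0))
```

With the balance heuristic, the weighted contribution simplifies to f divided by the sum of all densities, no matter which strategy generated the path.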

Developing these kinds of algorithms can be quite tricky because of the sheer number of corner cases that tend to occur in any actual implementation. To limit these complexities and enable compact code, Mitsuba relies on a bidirectional abstraction library (libmitsuba-bidir.so) that exposes the entire renderer in terms of generalized vertex and edge objects. As a consequence, these new algorithms “just work” with every part of Mitsuba, including the shapes, sensors, emitters, surface scattering models, and participating media. As a small caveat, there are a few remaining non-reciprocal BRDFs and Dipole-style subsurface integrators that don’t yet interoperate, but this will be addressed in a future release.
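To make the PSSMLT idea of "manipulating the random number stream" a bit more concrete, here is a toy sketch in Python. It is not Mitsuba code: the "renderer" is replaced by an arbitrary non-negative function `f` of a vector of uniform random numbers, and the parameter names (`large_step_prob`, `sigma`) are assumptions chosen for this example. The chain alternates between small perturbations of the current vector and occasional fresh vectors, and accepts each proposal with the usual Metropolis probability.

```python
import math
import random


def bump(u):
    """Toy 'path contribution': a narrow bump around u[0] = 0.5."""
    return math.exp(-((u[0] - 0.5) ** 2) / (2.0 * 0.1 ** 2))


def pssmlt_toy(f, dim, n_steps, large_step_prob=0.3, sigma=0.05, seed=0):
    """Metropolis sampling over vectors of uniform random numbers.

    Both proposal types are symmetric, so the acceptance probability
    reduces to min(1, f(proposal) / f(current)).
    """
    rng = random.Random(seed)
    u = [rng.random() for _ in range(dim)]
    fu = f(u)
    samples = []
    for _ in range(n_steps):
        if rng.random() < large_step_prob:
            # Large step: a completely fresh vector of random numbers.
            v = [rng.random() for _ in range(dim)]
        else:
            # Small step: perturb each number, wrapping around the unit interval.
            v = [(x + rng.gauss(0.0, sigma)) % 1.0 for x in u]
        fv = f(v)
        accept = 1.0 if fu == 0.0 else min(1.0, fv / fu)
        if rng.random() < accept:
            u, fu = v, fv
        samples.append(tuple(u))
    return samples


if __name__ == "__main__":
    chain = pssmlt_toy(bump, dim=2, n_steps=5000)
    print(sum(s[0] for s in chain) / len(chain))
```

The visited states concentrate where `f` is large, which is the behavior described above: the chain spends most of its time on inputs that carry a lot of energy.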

Bitmaps and Textures

The part of the renderer that deals with bitmaps and textures was redesigned from scratch, resulting in many improvements:

-

Out-of-core textures: Mitsuba can now comfortably work with textures that exceed the available system memory.

-

Blocked OpenEXR files: Mitsuba can write blocked images, which is useful when the image to be rendered is too large to fit into system memory.

-

Filtering: The quality of filtered texture lookups has improved considerably and is now up to par with mature systems designed for this purpose (e.g. OpenImageIO).

-

MIP map construction: now handles non-power-of-two images efficiently and performs a high-quality Lanczos resampling step to generate lower-resolution MIP levels, where a box filter was previously used. Due to optimizations of the resampling code, this is surprisingly faster than the old scheme!

-

Conversion between internal image formats: costly operations like “convert this spectral double precision image to an sRGB 8 bit image” occur frequently during the input and output phases of rendering. These are now much faster due to some template magic that generates optimized code for any conceivable kind of conversion.

-

Flexible bitmap I/O: the new bitmap I/O layer can read and write luminance, RGB, XYZ, and spectral images (each with or without an alpha channel), as well as images with an arbitrary number of channels. In the future, it will be possible to add custom rendering plugins that generate multiple kinds of output data (i.e. things other than radiance) in a single pass.
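The MIP map item above mentions Lanczos resampling of non-power-of-two images. The following is a minimal one-dimensional sketch of that idea, not the Mitsuba implementation: it resamples a single row of values, widens the kernel when shrinking, and clamps at the borders. The choice of halving with rounding down, the kernel radius `a=3`, and all function names are assumptions made for this example; a real texture would apply the same filter separably along both axes.

```python
import math


def lanczos(x, a=3):
    """Lanczos windowed-sinc kernel with radius `a`."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def resample_1d(src, dst_len, a=3):
    """Resample a row of values to `dst_len` entries with a Lanczos filter."""
    src_len = len(src)
    scale = src_len / dst_len
    stretch = max(scale, 1.0)  # widen the kernel when downsampling
    radius = a * stretch
    out = []
    for i in range(dst_len):
        center = (i + 0.5) * scale - 0.5  # position in source coordinates
        total = 0.0
        weight_sum = 0.0
        for j in range(math.floor(center - radius), math.ceil(center + radius) + 1):
            w = lanczos((j - center) / stretch, a)
            k = min(max(j, 0), src_len - 1)  # clamp lookups at the borders
            total += w * src[k]
            weight_sum += w
        out.append(total / weight_sum)  # normalize so constants stay constant
    return out


def mip_chain_1d(src):
    """Build successively halved levels until a single value remains."""
    levels = [list(src)]
    while len(levels[-1]) > 1:
        next_len = max(1, len(levels[-1]) // 2)
        levels.append(resample_1d(levels[-1], next_len))
    return levels


if __name__ == "__main__":
    for level in mip_chain_1d([0.0, 1.0, 4.0, 9.0, 16.0]):
        print(level)
```

Because the weights are renormalized for every output sample, a row of length 5 can be reduced to 2 and then 1 entries without any special casing for odd sizes.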

Sample generation

This summer, I had the fortune of working for Weta Digital. Leo Grünschloß from the rendering R&D group quickly had me convinced about all of the benefits of Quasi Monte-Carlo point sets. Since he makes his sample generation code available, there was really no excuse not to include this as plugins in the new release. Thanks, Leo!

-

sobol: A fast random-access Sobol sequence generator using the direction numbers by Joe and Kuo.

-

halton & hammersley: These implement the classic Halton and Hammersley sequences with various types of scrambling (including Faure permutations)

Apart from producing renderings with less noise, these can also be used to make a rendering process completely deterministic. When used together with tiling-based rendering techniques (such as the path tracer), these plugins use an enumeration technique (Enumerating Quasi-Monte Carlo Point Sequences in Elementary Intervals by Grünschloß et al.) to find the points within each tile.
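For readers unfamiliar with these point sets, here is a minimal sketch of the unscrambled Halton and Hammersley constructions via the radical inverse. It only illustrates the textbook definitions; it is not the plugin code, it omits scrambling and the per-tile enumeration mentioned above, and the function names are chosen for this example.

```python
def radical_inverse(index, base):
    """Mirror the base-`base` digits of `index` around the decimal point."""
    result = 0.0
    inv_base = 1.0 / base
    factor = inv_base
    while index > 0:
        result += (index % base) * factor
        index //= base
        factor *= inv_base
    return result


def halton(index, primes=(2, 3)):
    """One point of the Halton sequence, one prime base per dimension."""
    return tuple(radical_inverse(index, b) for b in primes)


def hammersley(index, count, primes=(2,)):
    """One point of a Hammersley set with `count` points in total."""
    return (index / count,) + tuple(radical_inverse(index, b) for b in primes)


if __name__ == "__main__":
    for i in range(1, 5):
        print(i, halton(i), hammersley(i, 4))
```

Since each point depends only on its index, the same index always yields the same sample, which is what makes a rendering driven by such a sequence deterministic.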

Sensors and emitters (a.k.a. cameras and light sources)

The part of Mitsuba that deals with cameras and light sources was rewritten from scratch, which was necessary for clean interoperability with the new integrators. To convey the magnitude of these modifications, cameras are now referred to as sensors, and luminaires have become emitters. This terminology change also reflects the considerably wider range of plugins to perform general measurements, rendering an image being a special case. For example, the following sensors are available:

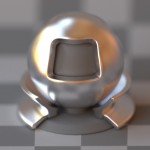

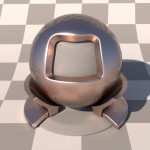

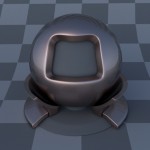

- Perspective pinhole and orthographic sensor: these are the same as always and create tack sharp images (demonstrated on the Cornell box and the material test object).

-

Perspective thin lens and telecentric lens sensor: these can be thought of as “fuzzy” versions of the above. They focus on a planar surface and blur everything else.

Lens nerd alert: the telecentric lens sensor is particularly fun/wacky! Although it provides an orthographic view, it can “see” the walls of the Cornell box due to defocus blur

- Spherical sensor: a point sensor, which creates a spherical image in latitude-longitude format.

-

Irradiance sensor: this is the dual of an area light. It can be attached to any surface in the scene to record the arriving irradiance and “renders” a single number rather than an image.

-

Fluence sensor: this is the dual of a point light source. It can be placed anywhere in the scene and measures the average radiance passing through that point.

-

Radiance sensor: this is the dual of a collimated beam. It records the radiance passing through a certain point from a certain direction.

The emitters are mostly the same (though, built using the new interface). The main changes are:

-

Environment emitter: the new version of this plugin implements slightly better importance sampling, and it supports filtered texture lookups.

-

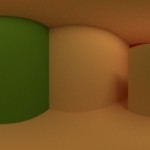

Skylight emitter: The old version of this plugin used to implement the Preetham model, which suffered from a range of numerical and accuracy-related problems. The new version is based on the recent TOG paper An Analytic Model for Full Spectral Sky-Dome Radiance by Lukáš Hošek and Alexander Wilkie. The sun model has also been updated for compatibility. Together, these two plugins can be used to render scenes under spectral daylight illumination, using proper physical units (i.e. radiance values have units of W/(m^2 ⋅ sr ⋅ nm)). The sky configuration is found from the viewing position on the earth and the desired date and time, and this computation is now considerably more accurate. This may be useful for architectural or computer vision applications that need to reproduce approximate lighting conditions at a certain time and date (the main caveat being that these plugins do not know anything about the weather).

- The “sunsky” emitter combines these two.

- The same object lit by the sun only.

- A coated copper object under skylight illumination.

When rendering with bidirectional rendering algorithms, sensors and emitters are now interpreted quite strictly to ensure correct output. For instance, cameras that have a non-infinitesimal aperture are represented as actual objects in the scene that collect illumination—in other words: a thin lens sensor facing a mirror will see itself as a small 100% absorbing disc. Point lights are what they really are (i.e. bright points floating in space.) I may work on making some of this behavior optional in future releases, as it can be counter-intuitive when used for artistic purposes.

Other notable extensions and bugfixes:

- The revamped mfilm supports writing Mathematica & MATLAB-compatible output.

- The new crash reporter on OSX

- Blending between gold and rough black plastic using a texture

-

obj: The Wavefront OBJ loader now supports complex meshes that reference many different materials. These are automatically imported from a mtl file if present and can individually be overwritten with more specialized Mitsuba-specific materials.

-

thindielectric: a new BSDF that models refraction and reflection from a thin dielectric material (e.g. a glass window). It should be used when two refraction events are modeled using a single sheet of geometry.

-

blendbsdf: a new BSDF that interpolates between two nested BSDFs based on a texture.

-

mfilm: now supports generating both MATLAB and Mathematica-compatible output.

-

hdrfilm: replaces the exrfilm plugin. This new film plugin can write OpenEXR, Ward-style RGBE, and PFM images.

-

ldrfilm: replaces the pngfilm plugin. The new film writes PNG and JPEG images with adjustable output channels and compression. It applies Gamma correction and, optionally, the photographic tonemapping algorithm by Reinhard et al.

-

dipole: the dipole subsurface scattering plugin was completely redesigned. It now features a much faster tree construction code, complete SSE acceleration, and it uses blue noise irradiance sample points.

- The same scene rendered with a different material density

- XYZRGB Dragon model rendered using the new dipole implementation

-

Handedness, part 2: this is a somewhat embarrassing addendum to an almost-forgotten bug. Curiously, old versions of Mitsuba had two handedness issues that canceled each other out—after fixing one of them in 0.3.0, all cameras became left-handed! This is now fixed for good, and nobody (myself, in particular) is allowed to touch this code from now on!

-

Batch tonemapper: the command-line batch tonemapper (accessible via mtsutil) has been extended with additional operations (cropping, resampling, color balancing), and it can process multiple images in parallel.

-

Animation readiness: one important aspect of the redesign was to make every part of the renderer animation-capable. While there is no public file format to load/describe the actual animations yet, it will be a straightforward addition in a future 0.4.x release.

-

Build dependencies: Windows and Mac OS builds now ship with all dependencies except for SCons and Mercurial (in particular, Qt is included). The binaries were recompiled so that they rely on a consistent set of runtime support libraries. This will hopefully end build difficulties on these platforms once and forever.

Note: The entire process for downloading the dependencies and compiling Mitsuba has changed a little. Please be sure to review the documentation.

-

CMake build system: Edgar Velázquez-Armendáriz has kindly contributed a CMake-based build system for Mitsuba. It essentially does the same thing as the SCons build system except that it is generally quite a bit faster. For now, it is still considered experimental and provided as a convenience for experienced users who prefer to use CMake. Both build systems will be maintained side-by-side in the future.

-

SSE CPU tonemapper: When running Mitsuba through a Virtual Desktop connection on Windows, the OpenGL support is simply too poor to support any kind of GPU preview. In the past, an extremely slow CPU-based fallback was used so that at least some kind of tonemapped image can be shown. Edgar replaced that with optimized SSE2 code from his HDRITools, hence this long-standing resource hog is gone.

-

SSE-accelerated Mersenne Twister: Edgar has also contributed a patch that integrates the SSE-accelerated version of Mersenne Twister by Mutsuo Saito and Makoto Matsumoto, which is about twice as fast as the original code.

-

Multi-python support: some platforms provide multiple incompatible versions of Python (e.g. 2.7 and 3.2). Mitsuba can now build a separate Python integration library for each one.

-

Breakpad integration: Mitsuba will happily crash when given some sorts of invalid input (and occasionally, when given valid input). In the past, it has been frustratingly difficult to track down these crashes, since many users don’t have the right tools to extract backtraces. Starting with this release, official Mitsuba builds on Mac OS and Windows include Google Breakpad, which provides the option to electronically submit a small crash dump file after such a fault. A decent backtrace can then be obtained from this dump file, which will be a tremendous help to debug various crashes.

-

boost::thread: Past versions of Mitsuba have relied on pthreads for multithreading. On Windows, a rather complicated emulation layer was needed to translate between this interface and the very limited native API. Over the years, this situation has improved considerably so that a simpler and cleaner abstraction, boost::thread, has now become a satisfactory replacement on all platforms. Edgar ported all of the old threading code over to boost.
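The ldrfilm entry above mentions gamma correction and the photographic tonemapping operator by Reinhard et al. As a rough illustration of what such a step does, here is a minimal sketch of the global form of that operator followed by a plain power-law gamma curve. This is not the plugin code: the parameter names (`key`, `white`, `gamma`) and their defaults are assumptions for this example, and the plugin's actual parameters and transfer curve should be taken from the documentation.

```python
import math


def reinhard_tonemap(luminances, key=0.18, white=None):
    """Global Reinhard operator applied to a list of luminance values.

    The image is first scaled so that its log-average luminance maps to
    `key`, then compressed into a displayable range.
    """
    delta = 1e-6  # avoids log(0) for black pixels
    log_avg = math.exp(
        sum(math.log(delta + lum) for lum in luminances) / len(luminances)
    )
    scaled = [key / log_avg * lum for lum in luminances]
    if white is None:
        return [lum / (1.0 + lum) for lum in scaled]
    # Variant that lets values at or above `white` burn out to 1 or more.
    return [lum * (1.0 + lum / (white * white)) / (1.0 + lum) for lum in scaled]


def gamma_encode(value, gamma=2.2):
    """Clamp to [0, 1] and apply a simple power-law gamma curve."""
    clamped = min(max(value, 0.0), 1.0)
    return clamped ** (1.0 / gamma)


if __name__ == "__main__":
    hdr = [0.01, 0.18, 1.0, 50.0]
    print([round(gamma_encode(v), 3) for v in reinhard_tonemap(hdr)])
```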

Compatibility:

There were some changes to plugin names and parameters, hence old scenes will not directly work with 0.4.0. Do not panic: as always, Mitsuba can automatically upgrade your old scenes so that they work with the current release. Occasionally, it just becomes necessary to break compatibility to improve the architecture or internal consistency. Rather than being tied down by old decisions, it is the policy of this project to make such changes while providing a migration path for existing scenes.

When upgrading scenes, please don’t try to do it by hand (e.g. by editing the “version” XML tag). The easiest way to do this automatically is by simply opening an old file using the GUI. It will then ask you if you want to upgrade the scene and do the hard work for you (a backup copy is created just in case). An alternative scriptable approach for those who have a big stash of old scenes is to run the XSLT transformations (in the data/schema directory) using a program like xsltproc.
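For the scriptable route, a batch upgrade might look roughly like the following sketch. It only assembles and optionally runs `xsltproc` commands; the stylesheet file name used in the usage example is hypothetical, so check the data/schema directory of your installation for the actual files. Depending on how old a scene is, more than one transformation may need to be applied in sequence, which this sketch does not attempt to handle. The helper names are also made up for this example.

```python
import subprocess
from pathlib import Path


def build_upgrade_command(stylesheet, scene, output):
    """Assemble an xsltproc call that writes the transformed scene to `output`."""
    return ["xsltproc", "--output", str(output), str(stylesheet), str(scene)]


def upgrade_scenes(scene_dir, stylesheet, out_dir, dry_run=True):
    """Apply one stylesheet to every XML file in `scene_dir`.

    With dry_run=True the commands are only returned, not executed, so
    the originals are never touched and nothing is written.
    """
    out_dir = Path(out_dir)
    commands = []
    for scene in sorted(Path(scene_dir).glob("*.xml")):
        cmd = build_upgrade_command(stylesheet, scene, out_dir / scene.name)
        commands.append(cmd)
        if not dry_run:
            out_dir.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # "upgrade_example.xsl" is a placeholder name, not a real file.
    for cmd in upgrade_scenes("old_scenes", "data/schema/upgrade_example.xsl", "upgraded"):
        print(" ".join(cmd))
```

Writing the results into a separate output directory keeps the old scenes intact, which serves the same purpose as the backup copy the GUI creates.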

Documentation

A lot of work has gone into completing the documentation and making it into something that’s fun to read. The images below show a couple of sample pages:

The high resolution reference manual is available for download here: documentation.pdf (a 36MB PDF file), and a low-resolution version is here: documentation_lowres.pdf (6MB). Please let me know if you have any suggestions, or you find a typo somewhere.

Downloads:

To download this release along with a set of sample scenes that you can play with, visit the download page. Enjoy!

Wenzel, superb! After some months of waiting in anticipation… it’s finally here.

Time to test and re-code some exporters

Congratulations on this milestone once again.

amazing. gotta try. gotta tell the MakeHuman guys

The dipole subsurface is amazing!

Wenzel, a suggestion for the documentation:

why was the readme for “adjustment files” removed, we use it a lot

to dynamically merge material xmls with auto converted collada.

I believe it was a chapter in 0.2 beta documentation pdf?

Just a thought

Hi Yoran,

this mechanism was always kind of unsatisfactory, and I think you are the only ones who are seriously using it.

The future plan is to write proper exporters which directly create the right format. But no worries — I won’t delete the feature. There is just (IMHO) no need to spend pages on it in the documentation.

Wenzel

Great, nice work.

Thank you, Wenzel!

-Reyn

the arch Linux binaries are not x86_64? can you build that?

I’ve uploaded x86_64 binaries for Arch linux.

Extreme! Man Wenzel I was a fan of your work at versions 0.2 … the developments in this release are just cementing that! Very inspiring work!

The best part is your documentation is just so thorough!

Oh another thing: Is it possible to use the materials in node environments? I know that there is no node gui in mitsuba, but if one were to make a Blender plugin to render into mitsuba would it in theory be possible to do the materials in the nodeeditor with the new blendbsdf etc?

sure, you can. Somebody just has to write an exporter that exposes this in a GUI and writes out the proper scene XML format.

But you can already build all kinds of wacky networks (and this was possible even in older versions, but not exposed in the now-defunct 0.3.0 blender plugin).

Wenzel

It’s over my good fortune. Mitsuba 0.4.0 does not work with my Windows XP SP3 32-bit. I tested with these binaries; “Legacy build (for 32 bit AMD processors and older hardware)” and with the new scenes (torus.zip).

I’ve also tried to include the folder “h:\mitsuba4″ in the system path.

I’ve only got an error message;

https://dl.dropbox.com/u/16848591/mitsuba/mitsuba4_fail.png

wow, this is a surprising error message. I will try to see if I can reproduce it somehow.

Which locale are you using? (i.e. what’s the language that is configured in your windows XP). Is it indeed English, as the screenshot suggests?

this issue has been fixed — it should work in 0.4.1.

i downloaded the windows 64bit version, but i can`t import obj files, also cannot open sample scenes.

when importing obj, it gives me an error msg

“Error while loading plugin “C:\temp\mitsuba 0.4.0\plugins/envmap.dll: the specified module could not be found”

maybe it`s just the syntax that messes things up? cause before envmap.dll there is a slash instead of a backslash in the error msg/

I will issue a fix shortly. For the meantime: I’ve been getting reports that the legacy (32 bit) version works.

A fixed release is now available.

Dear Jakob, your work is speechless! Thanks for every single moment and effort spent on it! The documentation equals the quality and completeness of the engine.

Thanks again!

Unfortunately i am getting errors on windows 7 x64, like the one already in your bug report page.

Fail to load envmap.dll and/or bitmap.dll.

Previous Mitsuba 0.3.1 works fine.

I’ve uploaded new binaries that fix this. Can you please download the renderer once more and try again?

Yap it works perfectly now!

I really like your work Wenzel, if i get the time ill write an adjustment file exporter for 3ds max to be able to export materials,cameras,lights to accompany the obj export.

Since .obj will export all meshes and uv coords it will be much easier and quicker than LuxMax.

Loading some scenes under Windows 7 64 bit raises exceptions in the plugin load routines. For example, trying to load matpreview.xml raises an error message box like:

2012-10-02 15:00:00 ERROR load [plugin.cpp:72] Error while loading plugin “C:\Program Files\Mitsuba 0.4.0\plugins/envmap.dll”: The specified module could not be found.

although the file envmap.dll is in the proper location searched by Mitsuba.

Thanks, Riccardo.

Hey — this should be fixed now. Can you please re-download and try again?

Fantastic work!!!

hope some popular 3d software plug-in if possible.

Apart from the mentioned problems when loading plugins (like envmap.dll or sphere.dll) on Windows 7 x64, when trying to test one of the new integrators (I can’t remember which one, and can’t try right now) I got a message saying that Mitsuba crashed (even though it was happily finishing the scene). The message said I could send a report if clicking “OK”, and so I did, but I didn’t get any confirmation that the report was sent correctly. Should it have appeared, or actually there’s no notification? If so, it would be a nice addition.

Thanks for sharing your hard work.

I’m working on the DLL issue. On Windows, there is currently no confirmation after a crash submission, but that might indeed be nice to have. I’ve been receiving a whole bunch and will start to review them soon.

I’ve uploaded new binaries that fix the DLL issue. Can you please try again?

Is there any chance we could get a test scene with the SSS material? I’m having trouble setting one up myself, unless I’m missing something very obvious.

Ignore this, didn’t realize the Cornell Box scene had SSS

You’re a crazy smart man! Thank you very much for sharing your renderer.

Very impressive work.

Glad to see Mitsuba is still under active development. The new shots are really amazing!

Beautiful and elegant renderer! I am jealous of your dev skills. Keep it up

I have the same error as povmaniaco

in log “ERROR main [FileResolver] Could not detect the executable path!” after “DEBUG main [Thread] Spawning thread “wrk5″ ”

System: xp sp3 win32

OpenGL renderer : GeForce GTX 550 Ti

OpenGL version : 4.2.0

hi — the Windows XP incompatibility has been fixed — it should work in 0.4.1.

anyone have luck installing on Linux 64 bit?

I was able to install the 2 Collada debs but I got errors when trying to install the Mitsuba files. The Mitsuba dev file says “dependencies not satisfiable” and the other simply fails to connect.

I also looked on graphicall for prebuilt versions but of course there’s nothing there yet.

the Ubuntu package dependency issue has been fixed in 0.4.1.

Great work !! It’s simply AWESOME, period.

Same “ERROR main [FileResolver] Could not detect the executable path!”

Win xp32

Mitsuba currently does not work on XP — this will be fixed in the next release. Also, consider upgrading 😉

Lol, that’s my notebook (xp 32 bit) on my desktop Win7 64 bit works like a charm 😉

I got a crash with the bi-dir integrator. With a basic scene created in Blender and exported in .dae, standard path tracing works fine, but the bi-dir fails: it calculates the scene except for the last bucket.

Here is a link where you can see the test (non crashing uni-dir PT)

http://blenderartists.org/forum/showthread.php?267979-Mitsuba-renderer-0-4-0-released!&p=2216683&viewfull=1#post2216683

That option is not easy in a country with 25% unemployment …

Wenzel, still getting crash on bi-dir integrator, mainly when i raise up amount of samples in “custom scenes” i build. matpreview works fine, not always though.

I always said “ok” to error report, hope you get info out of that.

Here are some basic tests i’m doing. With sunsky plugin i get really noisy/fireflies results with dielectric bsdf.

http://blenderartists.org/forum/showthread.php?267979-Mitsuba-renderer-0-4-0-released!&p=2216875&viewfull=1#post2216875

Hi Marco,

the issues in “bdpt” are due to a bug, which has already been fixed. It will work properly with the next release, which is planned for next week.

About the dielectric+sunsky issue: you have set up a scene that is extremely difficult for a path-tracing based algorithm to render. This is not something specific to Mitsuba — you would encounter the same problem if setting up this exact scene in other renderers. Basically the problem is that you have an intense light source that covers a very small set of directions (i.e. the sun), and it’s used to create caustic paths.

Currently, the only algorithms that can be used for things like this are the progressive photon mapping variants, primary sample space MLT (if you have lots of patience), and path-space MLT with manifold exploration.

Wenzel

Many thanks for clarifying Wenzel, can’t wait to play with stable bdpt. Doing some other tests in the same BA thread.

Keep the good work.

Regards

Mistake in the documentation on page 151: All parts of figure 32 have the same letter.

thanks!

Amazing.

Working pretty fine with the binaries provided for Arch x86_64… in a damned Optimus laptop.

Can’t wait to test this. Thanks for your hardwork.

———————-

The Force is strong with this one – Darth Vader

Hi, Wenzel. I found your work while I was searching the internet.

I skimmed over the documentation. It looks wonderful. Thanks for the great work.

I have one question:

Which integrators handle both participating media and surfaces?

Some integrators have “warnings” saying that they do not support participating media. I

Moon Jung

thanks, I’m glad you like it. The integrators supporting participating media are: both volumetric path tracers, photon mapping (only homogeneous media), the particle tracer, and all bidirectional rendering techniques.

Hmm.. Your answers seem to imply that the integrators that support participating media AUTOMATICALLY support

surfaces in the volume as well. Right?

Moon Jung

Note that there are two different ways of rendering participating media.

What I said in my previous post only applies to the first category.

Thanks, Wuenzel. Very clear. Let me ask some more questions.

1. when the volume renderers support surfaces, do they handle

light transmission through surfaces as well as light reflection?

2. How would you compare Mitsuba with the PBRT renderer? What does Mitsuba do that PBRT does not? Also, how easy is Mitsuba to use compared with PBRT, if you can comment on it?

Moon

Whoops, I forgot to respond to this question. In case it still matters:

1. Yes, the volume renderers jointly handle light transmission through surfaces *and* medium scattering.

2. How easy/convenient you find PBRT/Mitsuba is really mostly up to you, and what you’re looking for. The focus is obviously very different: because PBRT is simultaneously also a book, its implementations must be very compact and self-contained — I like it a lot, and the design of Mitsuba was partly influenced by it.

Mitsuba can spend considerably more space on various things. For example, its kd-tree construction and traversal code easily take up a few tens of thousands of lines of code, which could fill an entire book on its own. But this pays back in terms of rendering performance, so for Mitsuba this is the preferred tradeoff.

hello, i have a problem with mitsuba with linux ubuntu 12.10,

when i try to install it an error appears “Wrong architector AMD64″.

when i pass this line on terminal : uname -a i have this

Tue Oct 9 19:32:08 UTC 2012 i686 i686 i686 GNU/Linux

so i have 32 bit.

which version of mitsuba is compatible.

thx

hi — sorry, there are only 64 bit builds available. You will have to use a 64 bit linux OS to run them (you are currently on 32 bit).